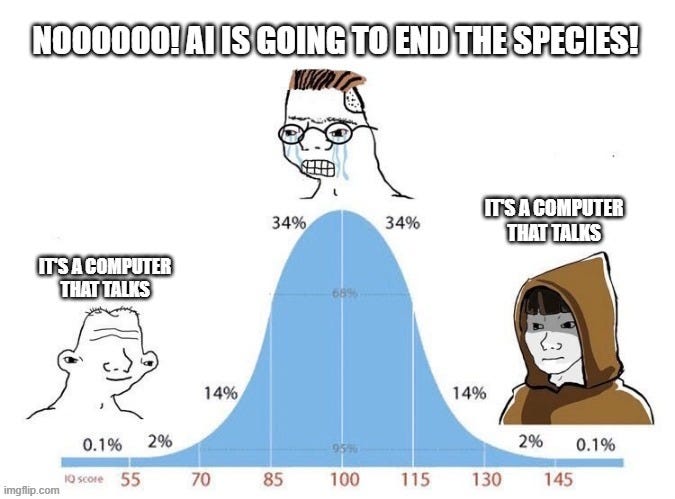

AI doomers and financial permabears: the same brand of pessimist

Some are genuinely well meaning, but most are shilling fear to sell newsletters and consulting services or to get attention. It's just human nature...

Intro: If you’re reading this post, congrats, we woke up again, meaning the AI doomers are still wrong and humans are still here. They can argue with themselves forever (or, more to the point, spread fear, just like the financial permabears do to sell newsletters, subscriptions, consulting, etc.). Both groups will say they’re never ‘wrong’ because existential destruction is always ‘just around the corner.’ The punting will go on forever. A few are well meaning and pretty balanced, like my friend Liron, who I had on a podcast episode and who shares sober thoughts to consider. But many are hucksters, preying on fear, or simply on the team of regulatory capture and control. Tale as old as time…

"The only thing we have to fear is the fear of change itself." — Seth Godin

In the world of technology and finance, there are two groups of people who tend to make sweeping and pessimistic predictions about the future: AI ‘doomers’ and financial ‘permabears.’ AI doomers are individuals who believe that artificial intelligence (AI) will inevitably lead to the downfall of humanity, while financial permabears are those who perpetually predict that financial markets are headed for a catastrophic crash. These two groups share more in common than one might think, and, while there are reasons to listen to some of these concerns, their extreme pessimism is broadly unfounded. Tech never really had much of an opportunity for a doom cult before, but its people were primed by years of dystopian sci-fi (Hollywood unconsciously hates tech).

Pessimism always sounds smart and optimism always seems misguided. It’s just how we’re wired. I’ve written on permabears and permabulls before, and that’s worth reading as well. But I think the AI doomers are basically captured by the same mentality. A couple of smart (and they are smart) people convinced of something are quite persuasive. But this pattern repeats throughout history (they’ll say “this time it’s different” because everyone thinks their time is special). A lot of you know this story well, but let’s go through it for those who don’t, so you’re primed for the discussion when it gets to you.

Basics on the doomers (vastly oversimplifying all this, but that’s all we need here; there’s no reason not to immunize yourself against the doom-pills)

Fear of unprecedented change

AI doomers fear that advanced AI systems will become uncontrollable and potentially hostile, leading to a dystopian future where machines dominate or eliminate humans.

Financial permabears predict that markets will crash, wiping out wealth and causing economic collapse. They tend to focus on worst-case scenarios, ignoring the resiliency and adaptability of markets.

Fiction over reality

AI doomsayers overlook the fact that AI technology is developed and used by humans. Responsible AI development includes ethical considerations and safeguards to prevent unintended consequences. It’s also just not as powerful as a few philosophy nerds think, and is not going to one day self-actualize and decide to start running amok. They’re always dreaming of future technologies that don’t even exist. Also, consciousness is not a computation.

Financial permabears often disregard the fundamental strengths and dynamics of markets, which have historically shown resilience, adaptability, and the ability to recover from downturns.

Ignoring progress

While some concerns about AI ‘safety’ are valid, there’s significant work on AI ethics, regulation, and responsible development. The AI community actively works to address risks and challenges, like anything else. I don’t think the risks here are different from those of any other consumer software development, which already uses plenty of ‘smart’ software. Note that larger entities love playing up the risks because, in theory, this could let them be the ones to guide regulation and shore up their moats. We’ll talk about this more shortly.

Financial markets have experienced numerous crises throughout history, but they have consistently recovered and grown in the long run. Ignoring this historical context can lead to erroneous predictions. It’s really the same as the people who hated nuclear and would prefer we continue to run coal everywhere. These people all just dislike progress. And that’s not to say we couldn’t listen to people with new ideas, but we should probably ignore the folk who simply wish society to go backwards. They need psychiatric help, honestly, no other way to say it.

Overlooking benefits

AI has the potential to bring about significant benefits, from improved healthcare and transportation to solving complex global challenges. Ignoring these positive aspects of AI paints an incomplete picture. And I’m sorry to kill the magic here, but despite people sharing fancy graphs and technically complex language, it’s just more software. I’ve worked in software and marketing since the 90s and it’s always been this way. People will argue ‘this time it’s different’ or that the products are special somehow, but I don’t buy it. Always more manias. The cycle continues.

Predicting perpetual market crashes ignores the fact that financial markets play a crucial role in funding innovation, driving economic growth, and creating wealth for individuals and societies. Of course there are crashes, because we’re still figuring things out, but we’re not going to a Mad Max-type scenario. We can distinguish people who are reasonably bearish in the short term from ZeroHedge-esque sellers of fearporn.

Confirmation bias

Both groups may suffer from confirmation bias, seeking out information and anecdotes that support their pessimistic views while downplaying or ignoring evidence to the contrary. I think they’ve heard one too many end-of-the-world bear cases or stories and now it’s etched in their brains. So instead of actually doing something about it, they go to the town square to announce that the world is ending. That doesn’t strike me as productive behavior; rather, it’s quite Malthusian.

De-growthers and monopolists really both want the same thing: control

While some of the concerns about AI and financial market stability are valid for some of the people building these systems, extreme pessimism from AI doomers and financial permabears is counterproductive for most of us. Lots of the people pushing this on retail are essentially ‘decels’ or ‘de-growthers’ and ultimately cause more pain than they think they prevent. Some go so far as to proclaim AI the largest threat to humanity, which could be true from a narrative perspective. Both groups underestimate human adaptability, innovation, and the ability to address challenges. Decels use bureaucracy as a weapon camouflaged as virtue, but ultimately harm us. Stopping nuclear, which is green, elemental energy, caused places like Germany to turn on more coal, which actually harms the environment. Large organizations love bureaucracy as it shores up their moat and lets them raise prices. The insurance firms were invited to write the ACA framework, with predictable results.

I also highly recommend watching this talk with Bill Gurley on why Silicon Valley is so successful and on the regulatory capture being what hamstrings growth.

Really, the de-growthers should have gone to Hollywood to be fiction writers or into some creative field, but the market mostly doesn’t value such work, so we’re stuck with them in the business realm. They’re always entertaining. I just don’t believe them. They’ll always say doom is just around the corner, or inevitable on a long enough timeline, or just throw whatever ad hominem arguments they can at people who don’t buy what they’re selling. There’s no upside for us in listening. We didn’t let the same kind of people stop us from developing human flight or any of the other marvels we have today. We should ignore them and keep building.

We really don’t need nanny-state bureaucrats overseeing code, which is in effect another form of free speech. Open source is the way. Some people want an ‘FDA but for tech’ and I can’t think of anything more dystopian. It is clear none of these people have ever worked in biotech or another regulatory-captured sector, or they would know better.

Wrapping up, if you want to be worried about something, geopolitical risk, which will only continue to rear its head, is what we should actually lose sleep over. Anyway, as I said on Twitter/X, remember that "AI" is just fancy software. It's not intelligent and we should stop anthropomorphizing it.

Take everything with a grain of salt; these days, a few grains. I’m not as down on the doomsayers, as they can make some very good points, and while I believe there is nothing wrong with prepping for a rainy day, I also don’t put all my eggs in that one basket.

I like this. I don't know that I like the idea of no regulation, that we can just rely on the developers and business to guide it in the right way. I'm super thankful for the ethicists that work on it. That said, I'll investigate my own opinions on this further. As usual, thanks!