Where does AI actually make sense?

Someone asked me this question, so let's discuss it

I’ve been critical of AI used for slop and of how it risks making people fatted and lazy. That said, I’m not a luddite. I like technology used thoughtfully, by people who consider tradeoffs and craft, and who are relentlessly passionate about the end product being uniquely theirs. What I don’t see is much discussion about when AI is actually appropriate and when you should skip it. I personally think this is obvious, but someone asked me to put down my thoughts, so let’s talk about it.

Put simply: if you don’t care about the details, AI could be great. That’s the tradeoff. Your level of care is directly proportional to how much the details matter. This is why AI works well for things like memes, throwaway content meant to be consumed and forgotten within a day. The fast food analogy applies nicely here. Note that many people are spiritually obese, consuming and creating nothing but this as their entire creative diet. To them, anything with depth or character might make their nihilism apparent, thus it’s frightening and to be made fun of (this is textbook demoralization).

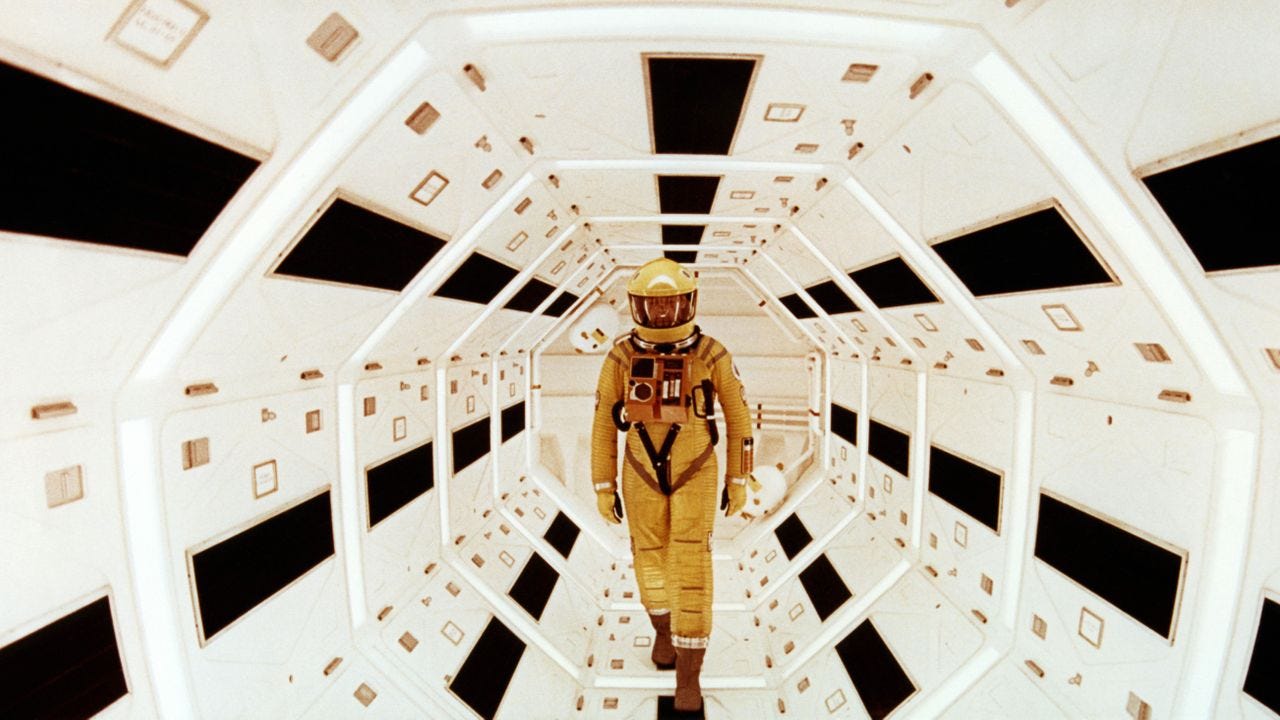

There are also practical cases where AI makes sense. Sometimes, you’re simply not resourced to do something you’d like. Take an indie filmmaker who can’t afford to shoot their own B-roll. AI might generate a “good enough” version to fill that gap. Plenty of great films were made on shoestring budgets; Primer and Napoleon Dynamite come to mind. If the story is creative, I wouldn’t mentally dock the artist points for using AI. They’re trying, working with what they have. You can almost always tell when someone’s heart is still in the work even if they had to do something scrappy. I do think that in film, the less processed and generated the work seems, the better the final product. I’d almost always rather trade visual effects for something told in a more lo-fi way, although there are some exceptions.

The same goes for writers. Sometimes you need an image to go with your story. A generated visual can serve the same illustrative role as stock art. I’ve done this myself: I created an AI image for my post about TikTok and Instagram as intellectual poison, picturing two people at a café staring at their phones instead of talking. It worked fine. In such cases, AI is no different from buying a stock photo. It’s a perfectly cromulent replacement (I still prefer human-created visuals, but on occasion I just can’t find one I like). Just mentally replace “generative” with “generic” and that’s basically what you’re getting.

Where AI also shines is in the repetitive, mechanical part of creative work: resizing banner ads, color-correcting photos, cutting reels, cleaning up podcast audio, transcribing, summarizing. These are tedious, technical tasks that benefit from speed, not soul. None of this work was ever all that creative anyway; you might even have outsourced it already if you had the budget. And most of it involves handling tasks after something human has already been created.

Where AI isn’t interesting to me at all? Music. I don’t want stock music. I want this form of art made with intention, care, and meaning in each moment. Music matters deeply to me, and there’s already more great human-made work out there than anyone could ever finish listening to in one lifetime. There’s no time for slop. I personally don’t consider music just a “placeholder” in my life; it’s much more important than that.

I wouldn’t want an AI-generated picture hanging in my living room, either, even if I would use one in something like a work presentation or meme. Stock art feels wrong as a substitute for a cultural artifact with real context and soul. I want to be connected to the story and the artist and be able to talk about that connection with guests. Leaving the stock photo of the smiling family or the generic sunset in a Target picture frame feels sociopathic. Listening to AI music feels similarly hollow.

And besides, words are a terrible way to describe music. We already have a far more precise and efficient interface: MIDI. It takes me less time to play or even click in a chord progression than to prompt, wait for an AI to render something, and then re-prompt endlessly trying to get the sound I want. Using an AI to generate specific sounds for a sampler is one good use case I can think of, because there it’s simply giving me clay to work with. But language is a poor interface for making entire pieces of music. I don’t want to prompt full songs, or listen to what you prompted. I don’t want a robot to go to the gym for me or take my wife to dinner, either.

Another useful framing: you can think of AI as an executive assistant. A top professional might rely on one to handle research, organization, and scheduling studio time. But they wouldn’t delegate creative direction, thesis development, or decision-making. Once you do, the work is no longer yours, because you’ve decided not to care about the details. And if you don’t care, why even bother?

Learning is another area where it is probably unwise to use AI too soon. A pilot doesn’t start on a 747; they start on a Cessna. You have to learn to fly something small before wholesale trusting autopilot with passengers, because one day it will fail, and in that moment, understanding the fundamentals is existentially important. The same principle applies to any craft. If you never learned to think, write, design, or compose by hand, you’ll never advance beyond the role of passenger. You might be performatively productive, but you’re not really flying the plane. True mastery comes from knowing how the controls work when the assistance stops or encounters a novel situation. And anyway, no one spends their weekends flying recreationally without flying the plane themselves. If you understand this paragraph, a lot more of the discussion around AI and creativity makes sense, and you can see where some people are lost.

Finally, AI might help finish work when you’re stuck. Sometimes there’s enough there for the machine to plausibly predict the next step. But even then, you’ll need to edit heavily. For something like writer’s block, that might be fine. Editing could be easier than starting. But in my experience, it takes a lot of effort to wrangle something into my voice. Then again, if you have no voice, if you are just doing something on a soulless assembly line, perhaps AI is really useful. But in a sea of infinite ideas competing for attention, I just don’t see how that makes it very far.

To wrap up, I don’t think you even have the so-called ‘discernment in taste’ everyone seems to want if it’s not apparent to you when to use automation or different tools in your creative work. It’s not that hard to know. I also don’t think the AI permabulls or permabears actually create anything, because they don’t get the nuance. Both sides are wrong. The reality of where AI (really just automation) will sit is somewhere in the middle, and when you use it there will always be some kind of tradeoff. Where you are okay with it says a lot about you (and likely determines whether your work is timeless or not).

Good article. I recently wrote a piece that echoes some of these themes.

AI is most useful when it's taking away boring drudge work, freeing up people's energy and creativity for the good stuff.

I don't use it much directly for creative output, but I do find it helpful as a thought partner or editor. Sometimes I will ask the AI to steelman an argument, critique my position, and help me evaluate it against contrary ones. AI can also be a helpful copy editor, suggesting ways to tighten up my language or giving me alternative ways of phrasing something. I prefer to have it make suggestions that I implement, though, rather than asking it to do the work. That way what I end up producing reflects my voice rather than that of the AI.

Fun article. I have the same question, but a different perspective. I think AI is in heavy use in music right now by all the aging artists performing in arenas. That AI makes them sound 20 years younger, and the fans couldn't care less, because they sound great.

I agree with you on creativity, but find it more useful to think of the human brain as the most important muscle in the body, one that (just like any muscle) needs regular exercise to maintain strength. If we're not careful, AI will do to our brains what car culture and desk jobs did to our bodies.